Kubernetes and AI: Orchestrating Machine Learning Workloads

THE DYNAMIC DUO OF MODERN IT

In an ever-evolving IT landscape, Kubernetes and Artificial Intelligence (AI) have emerged as a powerful combination, especially in the realm of machine learning. Kubernetes, an open-source container orchestration platform, enables scalable, automated deployment and management of applications, while AI, with its data-hungry models and algorithms, demands exactly that kind of dynamic environment for training and inference tasks. Together, they create a perfect synergy for modern IT infrastructures.

Kubernetes simplifies the deployment of machine learning models by managing complex AI workloads, scaling them across distributed computing environments, and ensuring efficient use of resources like GPUs and TPUs. It helps handle the unique challenges of machine learning, such as model versioning, continuous integration, and automating repeatable tasks like data preprocessing and model retraining.

AI workloads benefit from Kubernetes’ ability to handle distributed training and to optimize resource allocation in a multi-cloud or hybrid environment. As more businesses integrate AI into their operations—whether for real-time analytics, decision-making, or automation—Kubernetes provides the necessary framework to scale these AI applications while maintaining agility and cost efficiency.

This pairing is a game-changer, allowing organizations to fully harness the power of AI and machine learning without being bogged down by infrastructure management. Kubernetes not only makes AI adoption smoother but also future-proofs IT ecosystems for more advanced use cases like AI-driven automation and edge computing.

Let’s take a closer look at how Kubernetes can be used to automate and scale AI and machine learning (ML) workflows.

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. When it comes to machine learning, this platform provides the robust infrastructure needed to handle the complexities of ML workflows, which typically involve data ingestion, preprocessing, model training, validation, and deployment.

Kubeflow is a comprehensive toolkit designed to run ML workflows on Kubernetes. It simplifies the process of developing, orchestrating, deploying, and running scalable and portable ML workloads. A Kubeflow pipeline defines an ML workflow in Python as a sequence of steps that can be visualized as a directed acyclic graph (DAG). With these pipelines, the entire ML lifecycle can be automated, for example by triggering a run whenever new data arrives.
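To make the DAG idea more concrete, here is a minimal sketch in plain Python. It does not use the Kubeflow Pipelines SDK; the step names simply mirror the workflow stages mentioned earlier, and the dependency map is an assumption made for illustration. The standard-library `graphlib` module computes an order in which the steps could run.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest": set(),
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "validate": {"train"},
    "deploy": {"validate"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; graphlib raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    print(" -> ".join(execution_order(PIPELINE)))
```

A real pipeline engine does the same kind of dependency resolution before scheduling each step as a container, which is why a workflow with a circular dependency cannot be run.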

By combining Kubernetes with operators for distributed training frameworks (such as TensorFlow, PyTorch, and MPI) and with its autoscaling mechanisms (the built-in Horizontal Pod Autoscaler, HPA, and the Vertical Pod Autoscaler, VPA, which is installed as an add-on), you can run large-scale training jobs across multiple nodes efficiently.
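As a rough illustration of how horizontal autoscaling decides on a replica count, the sketch below follows the rule described in the Kubernetes documentation for the HPA: the desired count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up, and no change is made while that ratio stays within a tolerance (0.1 by default). The function name and the default replica bounds are assumptions for this example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # hypothetical lower bound
    max_replicas: int = 10,   # hypothetical upper bound
    tolerance: float = 0.1,
) -> int:
    """Apply the HPA scaling rule, then clamp to the configured bounds."""
    ratio = current_metric / target_metric
    # Within the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
```

The real controller also takes pod readiness, missing metrics, and stabilization windows into account, so this covers only the core arithmetic.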

Effective monitoring and logging are crucial for managing ML workflows, so it is essential to use monitoring tools that integrate with Kubernetes (such as Prometheus, Grafana, or the ELK Stack). These tools can monitor the performance of the workflows, and custom metrics can be set up to track the progress of training jobs, resource utilization, and similar signals.

Running ML workloads on Kubernetes also involves addressing security and compliance requirements. Role-based access control (RBAC) makes it possible, for example, to define fine-grained access controls for different components of the ML pipeline, and Network Policies enable controlled traffic flows between those components.
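As a sketch of what these two controls can look like, the Python snippet below builds a read-only RBAC Role and a NetworkPolicy as plain dictionaries and prints them as JSON, a format `kubectl apply -f` accepts. The namespace, object names, and `app` labels are assumptions made for this example, not values from a real cluster.

```python
import json


def read_only_role(namespace: str) -> dict:
    """Role that may read pods and their logs in one namespace, nothing more."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pipeline-viewer", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def allow_ingress_from(namespace: str, target_app: str, source_app: str) -> dict:
    """NetworkPolicy letting only pods labeled source_app reach pods labeled target_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {"from": [{"podSelector": {"matchLabels": {"app": source_app}}}]}
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace and component labels.
    print(json.dumps(read_only_role("ml-pipeline"), indent=2))
    print(json.dumps(allow_ingress_from("ml-pipeline", "model-server", "preprocessor"), indent=2))
```

The Role only takes effect once a RoleBinding attaches it to a user or service account, and the NetworkPolicy only if the cluster's network plugin enforces policies.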

Additionally, attention should be paid to resource utilization (ensuring CPU, memory, and GPU resources are used efficiently) and to efficient resource sharing among multiple machine learning tasks, combined with dynamic resource allocation based on the needs of each ML workflow task. Finally, seamless integration with CI/CD pipelines streamlines the process of deploying ML models into production.

ADDRESSING THE CHALLENGE

Leveraging the full potential of Kubernetes and AI to drive efficiency and innovation in your operations can seem overwhelming. Core pillars that need to be considered include expert guidance, customized solutions, ongoing support and maintenance, resource optimization models, and deep knowledge of security and compliance procedures. However, don’t let the complexity hold you back. Feel free to discuss with us how your company can harness the power of this dynamic duo.

With a deep understanding of all the complexities involved, Sky-E Red ensures that your infrastructure is scalable, secure, and future-proof.

In the meantime, let’s take a closer look at how companies across various industries are already leveraging Kubernetes to power their AI initiatives, along with the challenges and successes they encountered along the way.

REAL-WORLD EXAMPLES

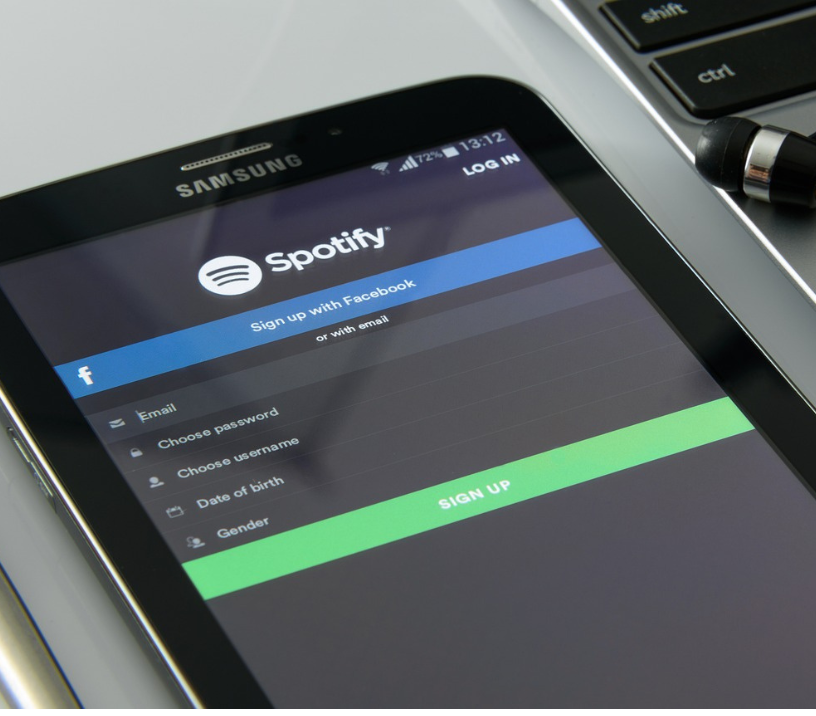

Spotify : Music Recommendations

Spotify's recommendation engine, which personalizes music suggestions, relies on vast amounts of data processed in real time. By containerizing its AI models and running them on Kubernetes, the company was able to scale its AI infrastructure on demand. Initially, the complexity of its distributed systems across multiple cloud providers posed a challenge; however, Kubernetes allowed Spotify to streamline resource management across different environments, ensuring the reliability of its recommendation systems on all platforms, even during traffic spikes.

Uber : Demand Forecasting & Autonomous Driving

Uber uses Kubernetes to manage the development of its ML models for demand forecasting, route optimization, and autonomous driving, relying on it for the orchestration needed to run and scale AI models dynamically across multiple data centers. The immense amount of data generated by autonomous vehicles posed the biggest challenge in the beginning: the company needed a system that could rapidly process the data to train models in real time. With Kubernetes, Uber was able to automate the deployment of new AI models and updates based on demand, without disrupting operations, while enhancing its predictive algorithms.

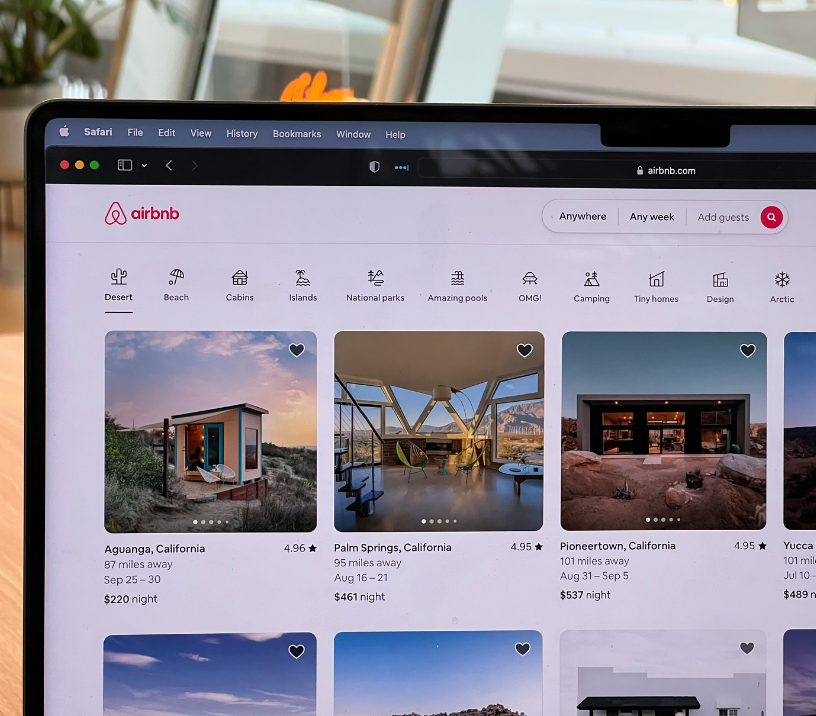

Airbnb : Search & Personalization

With the help of Kubernetes, Airbnb supports the AI models that power its search algorithms and recommendation systems. Its platform uses deep learning models to personalize search results. Peak traffic periods were a particular challenge, as was managing distributed pipelines across multiple regions, which added an extra layer of complexity. Kubernetes helped the company tackle both, enabling Airbnb to automate deployment and scale it effectively across different geographical locations, ensuring higher user engagement and customer satisfaction.

Pinterest : Visual Search & Content Discovery

Pinterest uses Kubernetes to deploy and manage deep learning models that process vast amounts of visual data and deliver personalized recommendations to users. The company faced challenges in scaling its machine learning infrastructure to support a constantly growing user base, especially when training complex AI models that required substantial computing resources. By adopting Kubernetes, Pinterest was able to scale models automatically based on demand, which not only improved its search and recommendation systems but also led to faster development cycles.

BMW Group : AI in Manufacturing

BMW uses Kubernetes to manage AI workloads in both its manufacturing processes and its autonomous vehicle development. The complexity of managing hybrid cloud environments and integrating AI into a global production network initially posed significant challenges; however, Kubernetes enabled the deployment of standardized and automated AI models both on-premises and in the cloud, leading to increased manufacturing efficiency.

In summary, managing distributed systems, scaling resource-intensive AI models in real time, handling vast datasets, and running large AI workloads cost-effectively, especially in the cloud, are challenges many businesses will face when they start navigating the complex AI landscape to develop custom solutions for their machine learning needs. However, we are always happy to help, one dataset at a time!

This way, you can make sure you take full advantage of the power of Kubernetes during the process:

- Scaling AI models dynamically based on demand, improving efficiency and cost-effectiveness.

- Using Kubernetes’ automation features, such as self-healing, load balancing, and auto-scaling, to reduce the manual effort required to manage the AI infrastructure.

- Quickly iterating and deploying new AI models, speeding up time to market.

- Managing containerized workloads across multiple environments to improve uptime and system resilience.

KUBERNETES, AI, AND THE FUTURE

The successes in terms of scalability, automation, and efficiency have positioned Kubernetes as a critical technology for AI initiatives. As AI workloads become more complex, scalable, and integral to business operations, Kubernetes will continue to play a critical role in managing these environments. This is particularly true in machine learning, where it simplifies scaling ML models, orchestrates complex workloads, handles containerized applications, and manages distributed training for AI models, helping to automate the entire ML pipeline from data preprocessing to model deployment. So let’s take a look at some key trends and technologies that could shape how Kubernetes is used for AI in the coming years, and why it might be a good idea for your company to join the “Kubernetes-Team” ahead of the curve.

Edge Computing and AI

Kubernetes is being adapted to manage workloads on edge devices through tools like K3s and MicroK8s, since AI models running on edge devices (such as sensors or IoT devices) require decentralized processing power to reduce latency and bandwidth usage.

Serverless AI on Kubernetes

The serverless computing model, where resources are automatically managed and scaled without manual infrastructure management, is gaining traction for AI workloads. Kubernetes tools (e.g., Kubeless, Knative) extend its capabilities into the serverless domain, enabling AI models to be deployed in a function-as-a-service (FaaS) model and making Kubernetes an efficient way to allocate resources for real-time data streams.

AI Model Management and Lifecycle Automation

AI model development involves a complex lifecycle, including training, testing, deployment, monitoring, and updating. Kubernetes-based MLOps tools are gaining popularity for automating the AI model lifecycle, helping to deploy AI at scale with reduced manual intervention, even when multiple models run across a variety of environments.

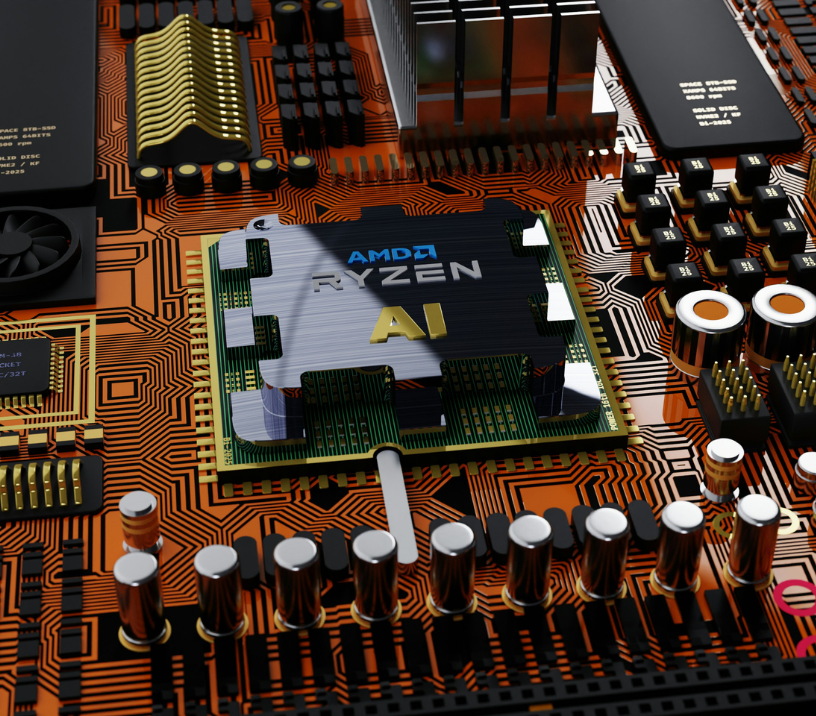

Integration of AI-Specific Hardware (GPUs, TPUs, and FPGAs)

AI workloads, particularly deep learning, require specialized hardware for faster computation. Kubernetes is evolving to manage and schedule these workloads. For example, it can schedule AI training jobs to run on nodes equipped with GPUs, optimizing resource use. As more AI models rely on hardware accelerators, Kubernetes will continue evolving to optimize performance and utilization for these devices.
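To illustrate how a training job asks for a GPU, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON. It assumes NVIDIA GPUs exposed through the vendor's device plugin, which advertises the extended resource `nvidia.com/gpu`; the pod name and container image are hypothetical.

```python
import json


def gpu_training_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Pod manifest whose single container requests whole GPUs via resource limits."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",  # a finished training run should not restart
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # The scheduler only places this pod on a node with enough free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical image name, used for illustration only.
    print(json.dumps(gpu_training_pod("train-demo", "registry.example.com/trainer:latest"), indent=2))
```

Because GPUs are requested in whole units through `limits`, a pod that asks for more GPUs than any node can offer simply stays pending until capacity appears.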

Hybrid Cloud AI Solutions

Many organizations are adopting hybrid cloud strategies, where AI workloads run across both on-premises data centers and cloud environments. Kubernetes is already a key enabler for hybrid cloud projects, and as AI adoption grows, it will likely become even more critical for hybrid cloud deployments, enabling companies to run AI workloads where it makes the most sense – whether due to cost, compliance, or performance considerations.

AI-Driven Kubernetes Orchestration

AI itself is being applied to optimize Kubernetes operations, creating self-optimizing clusters that automatically adjust resource allocation, scaling, and workload balancing. Auto-tuning tools for Kubernetes are emerging, leveraging AI and machine learning to optimize cluster performance in real-time, which will, over time, most likely result in AI-driven self-healing clusters that can predict failures and adjust configurations autonomously.

Security and Compliance for AI Workloads

As AI systems become more integrated into business processes, ensuring their security and compliance will be critical. Kubernetes is incorporating more features that enhance security and make it easier to trace and audit AI workflows for compliance purposes. This helps security frameworks built on Kubernetes keep pace with the demands of sensitive AI applications, particularly in regulated industries like finance, healthcare, and government.

Federated Learning on Kubernetes

Federated learning, where AI models are trained across decentralized data sources without actually sharing data, is gaining popularity for privacy-focused AI applications. Kubernetes can manage these architectures by orchestrating decentralized AI training jobs across different environments, deploying the models close to data sources (e.g., mobile devices, edge locations) to enable privacy-conscious machine learning.
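The aggregation step at the heart of many federated learning setups can be sketched in a few lines of Python. The example below shows federated averaging, one common technique, in which each site sends only its locally trained weights and its sample count, never its raw data. The function name and the toy numbers are assumptions for illustration.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Average client weight vectors, weighting each by its number of training samples."""
    total_samples = sum(count for _, count in client_updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, count in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, weight in enumerate(weights):
            averaged[i] += weight * count / total_samples
    return averaged


if __name__ == "__main__":
    # Two hypothetical edge sites: (local weights, number of local samples).
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(updates))
```

In a Kubernetes deployment, the local training would run as jobs close to each data source, and a central service would perform an aggregation like this one between rounds.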

Cost-Optimization for AI at Scale

Kubernetes’ resource management capabilities, including auto-scaling, together with the spot instance support and cost monitoring offered by cloud providers and ecosystem tools, allow organizations to optimize the use of cloud resources for AI workloads. Cost optimization features in and around Kubernetes will continue to evolve, and more intelligent cost-management solutions are likely to emerge that integrate directly with Kubernetes clusters running AI models.

FOOD FOR THOUGHT

The future of Kubernetes in the AI landscape is bright, with emerging technologies like edge computing, serverless AI, and so much more set to redefine how AI workloads are managed and scaled. Kubernetes will continue to evolve, becoming even more integral to AI-driven organizations by providing the infrastructure they need.

As these trends mature, Kubernetes will remain at the heart of AI orchestration. Companies should nevertheless embrace Kubernetes as early as possible, even if they have no large AI initiatives running or on the horizon. That is because Kubernetes lays the foundation for scalability, flexibility, and cost-efficiency across all operations, not just AI. It allows businesses to containerize and manage their applications, streamlining deployment processes and making future integrations with AI projects much smoother. By adopting Kubernetes now, companies can ensure that when AI demands grow, they already have the infrastructure in place to handle complex workloads, reducing the need for expensive overhauls later on. Additionally, Kubernetes’ ability to manage distributed systems ensures that companies can scale any future AI or non-AI projects efficiently, making it a forward-thinking investment in digital transformation.

With Kubernetes as a foundational layer, AI initiatives become easier to scale, deploy, and manage, enabling companies to focus on innovation and efficiency while ensuring seamless integration into existing IT infrastructures.

Let us know if you have any questions or are ready to start discovering the possibilities for using Kubernetes and AI in your business processes!